Getting Started with Apache Hudi: Simplifying Big Data Management and Processing

What is Apache Hudi?

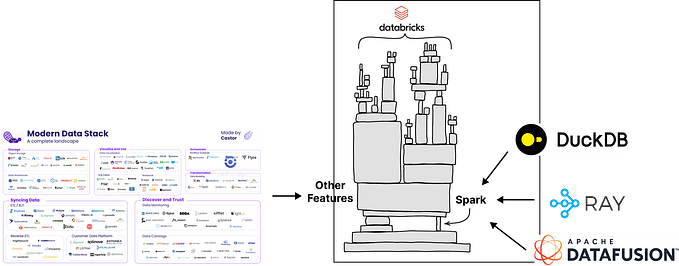

Apache Hudi (Hadoop Upserts Deletes and Incrementals) is an open-source data management framework that simplifies working with large datasets in big data systems like Apache Hadoop or Apache Spark. It provides efficient and scalable solutions for data ingestion, storage, and incremental processing.

Apache Hudi Architecture

The architecture of Apache Hudi revolves around its core components, which enable efficient data management and processing. Here is an overview of the Apache Hudi architecture:

1. Write Path:

- Data Source: Apache Hudi supports various data sources, including batch data from files or streaming data from sources like Apache Kafka or Apache Flume.

- Ingestion: The data is ingested into Apache Hudi using connectors or APIs, which convert the input data into Hudi records.

- Record Key and Partitioning: Apache Hudi assigns a unique record key to each record and partitions the data based on specified fields (e.g., date, region) to distribute it across the storage system.

- Hoodie Table: Apache Hudi organizes the data into a logical construct called a “Hoodie Table,” which consists of a collection of data files (stored as Parquet files by default) and an index.

- Copy-on-Write: With its default Copy-on-Write table type, Apache Hudi handles updates by rewriting files. When a record is updated, a new version of the entire affected file is created, and the previous version is marked as obsolete.

- Indexing: Apache Hudi maintains an index that maps record keys to their corresponding file locations, allowing for efficient record-level access and updates.
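The interplay between the index and copy-on-write updates can be illustrated with a small in-memory model. This is only a sketch of the idea, not Hudi code: the class `ToyCowTable`, its file names, and the sample records are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCowTable:
    """In-memory stand-in for a copy-on-write table (illustrative only)."""
    files: dict = field(default_factory=dict)     # file name -> {record key: record}
    index: dict = field(default_factory=dict)     # record key -> file name
    obsolete: list = field(default_factory=list)  # names of replaced file versions
    _version: int = 0

    def _new_file_name(self) -> str:
        self._version += 1
        return f"file-v{self._version}"

    def insert(self, records: dict) -> str:
        """Write a batch of new records into one new file and index them."""
        name = self._new_file_name()
        self.files[name] = dict(records)
        for key in records:
            self.index[key] = name
        return name

    def update(self, key: str, record: dict) -> str:
        """Rewrite the whole file that holds `key`, with that record replaced."""
        old_name = self.index[key]                 # index lookup: key -> file
        new_contents = dict(self.files[old_name])  # copy every record in the file
        new_contents[key] = record
        new_name = self._new_file_name()
        self.files[new_name] = new_contents
        for k in new_contents:                     # re-point all keys at the new file
            self.index[k] = new_name
        del self.files[old_name]
        self.obsolete.append(old_name)
        return new_name


table = ToyCowTable()
table.insert({"c1": {"email": "a@example.com"}, "c2": {"email": "b@example.com"}})
table.update("c1", {"email": "new@example.com"})
print(table.index)     # both keys now point at the rewritten file
print(table.obsolete)  # the replaced file version
```

Note that updating one record copies the untouched record `c2` as well, which is why copy-on-write favors read-heavy workloads over frequent small updates.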

2. Read Path:

- Query Engine Integration: Apache Hudi integrates with query engines like Apache Hive, Apache Spark, or Presto, enabling users to run SQL queries and perform analytics on the data stored in Hudi.

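- Incremental Processing: Apache Hudi supports incremental processing, which involves efficiently processing only the new or modified data instead of reprocessing the entire dataset. It achieves this by recording every commit on a timeline and stamping each record with the time of the commit that wrote it, so a reader can ask for only the records changed after a given commit.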
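As a rough illustration of incremental reads, the sketch below filters plain dictionaries by commit time. Hudi stores the commit time of each record in a metadata column named `_hoodie_commit_time`; everything else here (the function names, the sample rows, and the timestamps) is made up for the example.

```python
def incremental_read(records, begin_instant):
    """Return the records committed strictly after `begin_instant`.

    Commit times are timestamp strings, so plain string comparison
    orders them correctly as long as they share one format.
    """
    return [r for r in records if r["_hoodie_commit_time"] > begin_instant]


def next_checkpoint(records, previous):
    """Latest commit time seen so far, to use as the next begin instant."""
    return max([r["_hoodie_commit_time"] for r in records] + [previous])


rows = [
    {"customer_id": "1", "_hoodie_commit_time": "20230516090000"},
    {"customer_id": "2", "_hoodie_commit_time": "20230517090000"},
    {"customer_id": "3", "_hoodie_commit_time": "20230517120000"},
]

changed = incremental_read(rows, "20230517000000")
checkpoint = next_checkpoint(changed, "20230517000000")
print([r["customer_id"] for r in changed])
print(checkpoint)
```

A pipeline would persist `checkpoint` between runs so that each run picks up where the previous one stopped.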

3. Storage Layer:

- Apache Parquet: Apache Hudi leverages Apache Parquet, a columnar storage format, for efficient storage and compression. It allows for fast query performance and optimization of storage space.

- Distributed File System (DFS): Apache Hudi integrates with popular distributed file systems like Apache Hadoop Distributed File System (HDFS) or Amazon S3, where the data files and metadata are stored.

4. Consistency Guarantees:

- Write Consistency: Apache Hudi records every write as a commit on the table's timeline. A commit is atomic, so readers see either all of its changes or none of them.

- Index Consistency: If a write fails partway through, Apache Hudi rolls back the incomplete commit so that the index and the data files stay in agreement.

The Apache Hudi architecture enables efficient data ingestion, storage, updates, and querying in large-scale data environments. It simplifies managing big data workloads and provides features like incremental processing and efficient upserts and deletes.

Please note that this is a high-level overview of the Apache Hudi architecture, and there may be additional details and components specific to different versions or extensions of Apache Hudi. For more in-depth information, it is recommended to refer to the official Apache Hudi documentation and resources.

Understanding Apache Hudi

To understand Apache Hudi, let’s consider a simple use case:

Imagine you work for an e-commerce company that receives a massive amount of customer data every day. This data includes information about purchases, customer profiles, and product details. You need to process this data efficiently and ensure that you can easily update, delete, and query the data as needed.

Here’s how Apache Hudi can help:

- Data Ingestion: Apache Hudi allows you to ingest large volumes of data into your system. You can use Apache Hudi’s connectors or APIs to load data from various sources like databases, files, or streaming platforms. For example, you can load customer purchase data from a CSV file.

- Data Storage: Apache Hudi organizes the data into optimized file formats. Base files use Apache Parquet, a columnar format that provides efficient compression and enables fast querying and analytics, while Apache Avro, a row-based format, is used for the log files of Merge on Read tables.

- Upserts and Deletes: Apache Hudi supports efficient updates (upserts) and deletions of individual records in large datasets. For instance, if a customer updates their shipping address, you can use Apache Hudi to update only the affected record, instead of rewriting the entire dataset. This saves time and resources.

- Incremental Processing: Apache Hudi enables incremental processing, which means you can efficiently process only the new or modified data instead of reprocessing the entire dataset. For example, you can analyze the daily sales data without having to process historical data repeatedly.

- Querying and Analytics: Apache Hudi integrates seamlessly with tools like Apache Hive, Apache Spark, or Presto, allowing you to run SQL queries and perform analytics on your data. You can easily query the most up-to-date data or access historical versions of records.

In summary, Apache Hudi simplifies working with large datasets by providing efficient data ingestion, storage, and incremental processing capabilities. It allows for updates, deletions, and querying of data, while optimizing performance and resource utilization.

Overall, Apache Hudi is a powerful tool for building scalable data processing pipelines and managing big data effectively. It is commonly used in scenarios like real-time analytics, data lake ingestion, and data integration across various sources.
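The upsert behavior in the shipping-address scenario can be sketched in plain Python. This is a conceptual model only: the field names `customer_id`, `shipping_address`, and `updated_at` are hypothetical, and the `ordering_field` parameter stands in for the role that Hudi's precombine field plays when two versions of a record compete.

```python
def upsert(table, batch, key_field="customer_id", ordering_field="updated_at"):
    """Merge `batch` into `table`, a dict keyed by record key.

    Existing keys are updated, new keys are inserted, and when two versions
    of a record compete, the one with the larger ordering value wins.
    """
    merged = dict(table)
    for record in batch:
        key = record[key_field]
        current = merged.get(key)
        if current is None or record[ordering_field] >= current[ordering_field]:
            merged[key] = record
    return merged


customers = {
    "12345": {"customer_id": "12345", "shipping_address": "1 Old Road", "updated_at": 1},
}
incoming = [
    {"customer_id": "12345", "shipping_address": "9 New Street", "updated_at": 2},
    {"customer_id": "67890", "shipping_address": "5 Elm Avenue", "updated_at": 2},
    {"customer_id": "12345", "shipping_address": "stale value", "updated_at": 0},
]

customers = upsert(customers, incoming)
print(customers["12345"]["shipping_address"])
print(sorted(customers))
```

The late-arriving record with `updated_at` of 0 is ignored, which is the reason an ordering column matters when events can arrive out of order.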

Here are the explanations for each of the five points with references:

- Data Ingestion: Apache Hudi provides connectors and APIs to facilitate the ingestion of data from various sources. It supports popular data sources such as Apache Kafka, Apache Flume, Apache Nifi, and more. These connectors enable seamless integration and efficient data loading into Apache Hudi. You can refer to the Apache Hudi documentation for more details on data ingestion: Apache Hudi — Data Ingestion

- Data Storage: Apache Hudi organizes data into optimized file formats. Base files use Apache Parquet, whose columnar layout and compression enable faster data access and query performance, while Apache Avro, a row-based format, is used for the log files of Merge on Read tables. By leveraging these formats, Apache Hudi optimizes storage efficiency while maintaining data integrity. You can find more information about data storage in Apache Hudi’s documentation: Apache Hudi — Data Storage

- Upserts and Deletes: Apache Hudi allows for efficient updates (upserts) and deletions of individual records in large datasets. It employs techniques like copy-on-write and record-level indexing to achieve high-performance updates and deletes. This means you can update or delete specific records without rewriting the entire dataset. The Apache Hudi documentation provides detailed information on how upserts and deletes work: Apache Hudi — Upserts and Deletes

- Incremental Processing: Apache Hudi enables incremental processing, which means you can process only the new or modified data instead of reprocessing the entire dataset. It achieves this through mechanisms like file-level and record-level change tracking. This allows for efficient data processing and reduces the overall processing time. You can refer to the Apache Hudi documentation to learn more about incremental processing: Apache Hudi — Incremental Processing

- Querying and Analytics: Apache Hudi integrates seamlessly with popular data processing frameworks like Apache Hive, Apache Spark, and Presto. This enables you to run SQL queries and perform analytics on your data stored in Apache Hudi. You can leverage the power of these frameworks to easily access the most up-to-date data or query historical versions of records. The Apache Hudi documentation provides examples and guides on querying data using different frameworks: Apache Hudi — Querying Data

These references should provide you with detailed information on each aspect of Apache Hudi and help you explore further as you dive into using Apache Hudi in your data management and processing workflows.

How to install and start working?

To install and start working with Apache Hudi, you need to follow these steps:

1. Set up a Hadoop or Spark Cluster: Apache Hudi is typically used in conjunction with Hadoop or Spark. Set up a Hadoop or Spark cluster, either in a single-node or distributed mode, depending on your requirements.

2. Install Apache Hudi Dependencies: To work with Apache Hudi, you need to install the necessary dependencies. Here are the main dependencies:

- Apache Hudi itself: You can download the Apache Hudi package from the official Apache Hudi website or use a package manager like Maven or Gradle to include it in your project.

- Apache Spark: Install Apache Spark if you plan to use it as the processing engine with Hudi. You can download Spark from the official Apache Spark website.

- PySpark: If you prefer to use Python, make sure you have PySpark installed. You can install it using pip:

pip install pyspark

3. Set up the Environment: Configure your development environment to work with Apache Hudi. This may include setting environment variables, adjusting Spark configurations, and ensuring that your cluster is accessible.

4. Start Coding: Once you have set up your environment, you can start coding using Apache Hudi in your preferred programming language. For example, you can write Scala, Java, or Python code to interact with Apache Hudi.

- In Scala or Java, you can import the necessary Hudi classes and start using Hudi APIs.

- In Python, you can use the PySpark API to interact with Apache Hudi. Import the necessary modules and functions, and write PySpark code to create, read, update, or delete data using Hudi.

5. Build and Run: Once you have written your code, you can build your application using the appropriate build tool (e.g., Maven or Gradle) and execute it on your Hadoop or Spark cluster.

- For Scala or Java, you can build a JAR file and submit it to the cluster using the spark-submit command.

- For Python, you can execute your PySpark code using the spark-submit command or run it interactively using the PySpark shell.

It’s important to refer to the Apache Hudi documentation and resources for detailed instructions, examples, and best practices. The documentation provides comprehensive information on installation, setup, API usage, and code samples. You can find the official Apache Hudi documentation here: Apache Hudi Documentation

Additionally, you can explore the Apache Hudi GitHub repository, which contains examples, tutorials, and community-contributed resources: Apache Hudi GitHub Repository

Remember to adapt the installation and setup steps based on your specific environment and requirements.
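For the environment and run steps, one possible approach is to let Spark download the Hudi bundle at launch time. The sketch below only builds the settings and the spark-submit argument list, so it runs without Spark installed. The version numbers (Spark 3.4, Scala 2.12, Hudi 0.14.0) and the script name `hudi_example.py` are assumptions; pick the bundle that matches your cluster.

```python
def hudi_spark_conf(spark_line="3.4", scala_version="2.12", hudi_version="0.14.0"):
    """Spark settings commonly used to enable Hudi (default versions are assumptions)."""
    bundle = (
        f"org.apache.hudi:hudi-spark{spark_line}-bundle_{scala_version}:{hudi_version}"
    )
    return {
        # Maven coordinates of the Hudi Spark bundle, fetched when the job starts
        "spark.jars.packages": bundle,
        "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
        "spark.sql.extensions": "org.apache.spark.sql.hudi.HoodieSparkSessionExtension",
    }


def spark_submit_command(script, conf):
    """Build the argument list for launching `script` with spark-submit."""
    args = ["spark-submit"]
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    args.append(script)
    return args


conf = hudi_spark_conf()
command = spark_submit_command("hudi_example.py", conf)
print(" ".join(command))
```

The same dictionary can be applied inside a script by calling `.config(key, value)` on `SparkSession.builder` for each entry before `getOrCreate()`.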

Explanation of the above 5 points (under Understanding Apache Hudi)

Here’s a working Python code example that demonstrates the five points we discussed using Apache Hudi:

Creating Spark Session

from pyspark.sql import SparkSession
from pyspark.sql.functions import col, lit

# Create a Spark session. Hudi has no separate Python package to import:
# the Hudi Spark bundle JAR must be on the Spark classpath
# (for example via spark-submit --packages).
spark = (
    SparkSession.builder
    .appName("ApacheHudiExample")
    .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
    .getOrCreate()
)

# Options shared by every write to this table
hudi_options = {
    "hoodie.table.name": "customer_data",
    "hoodie.datasource.write.recordkey.field": "customer_id",
    "hoodie.datasource.write.partitionpath.field": "date",
}
# Hudi also uses a precombine field (hoodie.datasource.write.precombine.field)
# to pick the latest version of a record; set it to a suitable column in your data.

1. Data Ingestion

# Load data from a CSV file
df = spark.read.csv("customer_data.csv", header=True)

# Write the DataFrame as a Hudi table (save() returns nothing, so there is no result to assign)
df.write.format("org.apache.hudi").options(**hudi_options).mode("overwrite").save("customer_data.hudi")

2. Data Storage

# Hudi stores the table's base files in Parquet; read the table back as a DataFrame
hudi_df = spark.read.format("org.apache.hudi").load("customer_data.hudi")
hudi_df.show()

3. Upserts and Deletes

# Update a specific record: select it, change the column with lit(), and upsert it back
updated_df = hudi_df.filter(col("customer_id") == "12345").withColumn("email", lit("updated_email@example.com"))
(
    updated_df.write.format("org.apache.hudi")
    .options(**hudi_options)
    .option("hoodie.datasource.write.operation", "upsert")
    .mode("append")
    .save("customer_data.hudi")
)

4. Incremental Processing

# Process only the records committed after begin_time.
# "000" is earlier than any commit, so this reads every change;
# in a pipeline, use the commit time of the last run instead.
begin_time = "000"
incremental_df = (
    spark.read.format("org.apache.hudi")
    .option("hoodie.datasource.query.type", "incremental")
    .option("hoodie.datasource.read.begin.instanttime", begin_time)
    .load("customer_data.hudi")
)
incremental_df.show()

5. Querying and Analytics

# Run SQL queries on the Hudi dataset
incremental_df.createOrReplaceTempView("customer_table")
query_result = spark.sql("SELECT * FROM customer_table WHERE date = '2023-05-17'")
query_result.show()

Make sure to install the required dependencies, such as PySpark, and make the Apache Hudi Spark bundle JAR available to Spark before running this code. You may need to adjust the paths and options based on your specific use case.

This code demonstrates loading data from a CSV file into Apache Hudi, upserting a record, reading changes incrementally, and running SQL queries on the Hudi dataset. Deletes follow the same write pattern with the write operation set to delete. You can customize it according to your dataset and requirements.

Note: This is a simplified example to showcase the concepts. In a real-world scenario, you may need to handle error cases, schema evolution, and other considerations.
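Hudi writes are driven by a handful of `hoodie.*` options, and a small helper can keep them in one place for upserts and deletes alike. The following is a sketch under assumptions: the helper name `hudi_write_options` and the column `updated_at` are hypothetical, and the list of operations is only a subset of what Hudi accepts.

```python
SUPPORTED_OPERATIONS = {"insert", "upsert", "bulk_insert", "delete"}


def hudi_write_options(table_name, record_key, partition_field,
                       precombine_field, operation="upsert"):
    """Build the option dictionary for one Hudi write through Spark."""
    if operation not in SUPPORTED_OPERATIONS:
        raise ValueError(f"unsupported operation: {operation!r}")
    return {
        "hoodie.table.name": table_name,
        "hoodie.datasource.write.recordkey.field": record_key,
        "hoodie.datasource.write.partitionpath.field": partition_field,
        # Column used to choose the latest version when keys collide
        "hoodie.datasource.write.precombine.field": precombine_field,
        "hoodie.datasource.write.operation": operation,
    }


upsert_opts = hudi_write_options("customer_data", "customer_id", "date", "updated_at")
delete_opts = hudi_write_options("customer_data", "customer_id", "date", "updated_at",
                                 operation="delete")

# With a running Spark session, a DataFrame holding the rows to remove
# could then be written like this (not executed here):
#   rows_to_delete.write.format("hudi").options(**delete_opts) \
#       .mode("append").save("customer_data.hudi")
print(delete_opts["hoodie.datasource.write.operation"])
```

Only the operation differs between the two dictionaries, so a delete is expressed as a write of the records that should disappear.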

Q/A

i. What is Apache Hudi used for?

Ans: Apache Hudi is an open-source data management framework used to simplify incremental data processing and data pipeline development. This framework more efficiently manages business requirements like data lifecycle and improves data quality.

ii. Who started Apache Hudi?

Ans: Uber created Hudi and submitted it to the Apache Software Foundation in 2019, releasing it as open-source software and forming a community around it. Since then, other large data-rich organizations like Walmart and Disney have adopted it as their primary data platform.

iii. Is Apache Hudi a database?

Ans: Apache Hudi is a transactional data lake platform that brings database and data warehouse capabilities to the data lake.

iv. How does Hudi work?

Ans: Hudi organizes a dataset into a partitioned directory structure under a basepath that is similar to a traditional Hive table. The specifics of how the data is laid out as files in these directories depend on the dataset type that you choose. You can choose either Copy on Write (CoW) or Merge on Read (MoR).

v. What does Hudi stand for?

Ans: The name Hudi stands for “Hadoop Upserts Deletes and Incrementals,” reflecting its ability to efficiently process data updates and deletes while supporting incremental processing. It was developed by Uber in 2016, entered the Apache Incubator in 2019, and became an Apache Software Foundation top-level project in 2020.

vi. Which company owns Apache?

Ans: No company owns Apache. Apache projects, including Apache Hudi, are developed and maintained by an open community of developers under the auspices of the Apache Software Foundation, a non-profit organization.

vii. Does Hudi use Avro?

Ans: Hudi uses Avro as the internal canonical representation for records, primarily due to its nice schema compatibility & evolution properties.

viii. What is the primary key in Hudi?

Ans: Every record in Hudi is uniquely identified by a primary key, which is the pair of the record key and the partition path to which the record belongs.
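A minimal way to picture this pair is a two-field tuple. The names `HoodieKeySketch` and `key_for` and the sample rows below are hypothetical and only mirror the idea, not Hudi's own classes.

```python
from typing import NamedTuple


class HoodieKeySketch(NamedTuple):
    """Record key plus partition path, compared together as one identity."""
    record_key: str
    partition_path: str


def key_for(row, record_key_field, partition_field):
    """Derive the identifying pair for a row from two of its columns."""
    return HoodieKeySketch(str(row[record_key_field]), str(row[partition_field]))


a = key_for({"customer_id": 12345, "date": "2023-05-17"}, "customer_id", "date")
b = key_for({"customer_id": 12345, "date": "2023-05-18"}, "customer_id", "date")
c = key_for({"customer_id": 12345, "date": "2023-05-17"}, "customer_id", "date")

print(a == c)  # same record key and same partition path
print(a == b)  # same record key, different partition path
```

Because the partition path is part of the identity, the choice of partition field affects which incoming rows count as updates to an existing record.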

ix. What are the disadvantages of Apache Hudi?

Ans: With the Copy on Write model, a sudden burst of writes can cause a large number of files to be rewritten, resulting in a lot of processing. With Merge on Read, when an update to a record comes in, Hudi instead appends it to a log for that logical data lake table, which keeps writes cheap but means reads must merge the log with the base files until the log is compacted.
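The Merge on Read side of this trade-off can be sketched with a toy model in which updates go to a log and are folded into the base data later. The class `ToyMorFileGroup` and its sample records are hypothetical and greatly simplified compared to Hudi's actual file groups.

```python
class ToyMorFileGroup:
    """Toy model of a base file plus an append-only log of updates."""

    def __init__(self, base):
        self.base = dict(base)  # record key -> record, as of the last compaction
        self.log = []           # (record key, record) pairs appended since then

    def write(self, key, record):
        """Cheap write: append to the log instead of rewriting the base."""
        self.log.append((key, record))

    def read(self):
        """Merge at read time: replay the log over the base, in order."""
        merged = dict(self.base)
        for key, record in self.log:
            merged[key] = record
        return merged

    def compact(self):
        """Fold the log into a new base so later reads need no merging."""
        self.base = self.read()
        self.log = []


group = ToyMorFileGroup({"c1": {"email": "a@example.com"}})
group.write("c1", {"email": "first@example.com"})
group.write("c1", {"email": "second@example.com"})
group.write("c2", {"email": "b@example.com"})

before = group.read()
pending = len(group.log)
group.compact()
print(before == group.read(), pending, len(group.log))
```

Reads return the same result before and after compaction; what changes is how much merging work each read has to do.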

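x. What is the storage format of Apache Hudi?

Ans: Apache Hudi stores base data files in Apache Parquet by default and, for Merge on Read tables, keeps log files in the row-based Avro format. To ensure high performance, the platform employs the HFile format to store metadata and indexes, though different implementations are free to choose their own.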

Embrace the challenge of learning Apache Hudi and unlock the potential to revolutionize your big data journey. The knowledge you gain will empower you to conquer complex data landscapes and pave the way for innovation and success.